
Reading papers is hugely important, but learning how to read the medical literature is an entirely different realm, and many of us have statsphobia.

The Free Open Access Medical Education (FOAM)

The Bread and Butter

Kappa – a coefficient indicating the degree of inter-rater reliability. How reliably do different people get the same result for a given test or evaluation?

- For example, you would want two people looking at the same chest x-ray to agree on the presence or absence of an infiltrate. Some of that agreement will happen by chance alone, and kappa tries to account for this. Similarly, clinical decision aids are often composed of various historical and physical exam features. It would be nice if different clinicians evaluating the same patient turned up the same findings (thereby making the decision aid consistent and reliable).

To see how to calculate kappa, check this out.

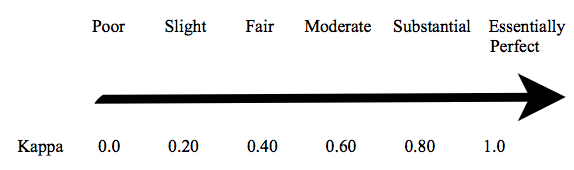
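For those who would rather see the arithmetic than a picture, here is a minimal sketch of the usual two-rater calculation: kappa = (observed agreement - chance agreement) / (1 - chance agreement). The function name `cohen_kappa` and the chest x-ray counts are hypothetical, made up purely for illustration and not taken from any study cited here.

```python
def cohen_kappa(both_yes, a_yes_b_no, a_no_b_yes, both_no):
    """Cohen's kappa for two raters scoring a yes/no finding.

    Arguments are the four cells of the 2x2 agreement table (counts).
    Undefined (division by zero) if chance agreement is exactly 1,
    e.g. when both raters say "no" for every patient.
    """
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no

    # Observed agreement: proportion of cases where the raters match.
    p_observed = (both_yes + both_no) / n

    # Proportion of "yes" calls made by each rater.
    a_yes = (both_yes + a_yes_b_no) / n
    b_yes = (both_yes + a_no_b_yes) / n

    # Agreement expected by chance if the raters were independent.
    p_chance = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)

    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical data: 100 chest x-rays read by two clinicians.
# Both see an infiltrate in 20, both see none in 60, and they
# disagree on 20 (10 in each direction).
kappa = cohen_kappa(both_yes=20, a_yes_b_no=10, a_no_b_yes=10, both_no=60)
print(round(kappa, 2))  # 0.52
```

In this made-up example the raters agree on 80% of films, but 58% agreement would be expected by chance alone, so kappa lands around 0.52.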

The value of kappa ranges from -1 (perfect disagreement) to +1 (perfect agreement). A value of 0 means that the observed agreement is no better than what would be expected by chance alone [1-2].

People debate what a “good” kappa is. Some say 0.6, some say 0.5 [1-2]. In the PECARN decision aid, for example, the authors only included variables with a kappa of at least 0.5 [3-4].

Limitations:

- Prevalence – if prevalence is very high (or very low), chance agreement is also high, which pulls kappa down even when raters usually agree. Kappa takes into account the prevalence index; however, raters may also be predisposed to not diagnose a rare condition, so the prevalence index provides only an indirect indication of true prevalence, altered by rater behavior [6].

- The raters – agreement may vary based on rater skill, experience, or education. For example, when agreement on the PECARN variables was compared between nurses and physicians, the overall kappa for “low risk” by PECARN was 0.32, below the commonly cited thresholds, which suggests that much of the agreement may be due to chance [5].

- Independence – kappa is based on the assumption that ratings are independent (i.e., a rater does not know the category assigned by a prior rater).
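To make the prevalence limitation concrete, here is a small sketch comparing two hypothetical pairs of raters who both agree on 90 of 100 patients. The helper `kappa_2x2` and all counts are invented for illustration; the only difference between the scenarios is how often the finding is called present.

```python
def kappa_2x2(both_yes, a_yes_b_no, a_no_b_yes, both_no):
    """Cohen's kappa from the four cells of a two-rater yes/no table."""
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no
    p_observed = (both_yes + both_no) / n
    a_yes = (both_yes + a_yes_b_no) / n
    b_yes = (both_yes + a_no_b_yes) / n
    # Chance agreement rises as "yes" calls become very rare or very common.
    p_chance = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return (p_observed - p_chance) / (1 - p_chance)


# Scenario 1 (hypothetical): finding called present about half the time.
common = kappa_2x2(both_yes=45, a_yes_b_no=5, a_no_b_yes=5, both_no=45)

# Scenario 2 (hypothetical): finding rarely called present.
rare = kappa_2x2(both_yes=2, a_yes_b_no=5, a_no_b_yes=5, both_no=88)

print(round(common, 2))  # 0.8
print(round(rare, 2))    # 0.23
```

Same 90% raw agreement, very different kappas: when almost everyone is rated "no", most of that agreement is what chance would produce anyway.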

References

1. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-3.

2. McGinn T, Wyer PC, Newman TB, et al. Tips for teachers of evidence-based medicine: 3. Understanding and calculating kappa. CMAJ. 2004;171(11).

3. Kuppermann N, Holmes JF, Dayan PS, et al. Identification of children at very low risk of clinically-important brain injuries after head trauma: a prospective cohort study. Lancet. 2009;374(9696):1160-70.

4. Gorelick MH, Atabaki SM, Hoyle J, et al. Interobserver agreement in assessment of clinical variables in children with blunt head trauma. Acad Emerg Med. 2008;15(9):812-8.

5. Nigrovic LE, Schonfeld D, Dayan PS, Fitz BM, Mitchell SR, Kuppermann N. Nurse and Physician Agreement in the Assessment of Minor Blunt Head Trauma. Pediatrics. 2013.

6. de Vet HC, Mokkink LB, Terwee CB, Hoekstra OS, Knol DL. Clinicians are right not to like Cohen’s κ. BMJ. 2013;346:f2125.
